About

Hi there! I am currently a postdoctoral researcher at Tongji University, jointly supervised by Prof. Guang Chen and Prof. Changjun Jiang. I received my Ph.D. from Tongji University in 2024.

My research focuses on transfer learning and its applications in Embodied AI and AI4Science. I am particularly interested in developing robust and efficient pattern recognition algorithms and applying them to computer vision, robotics, and drug discovery.

I am open to new opportunities and collaborations. If you are interested in my research, please feel free to contact me.

Recent News

2025-07

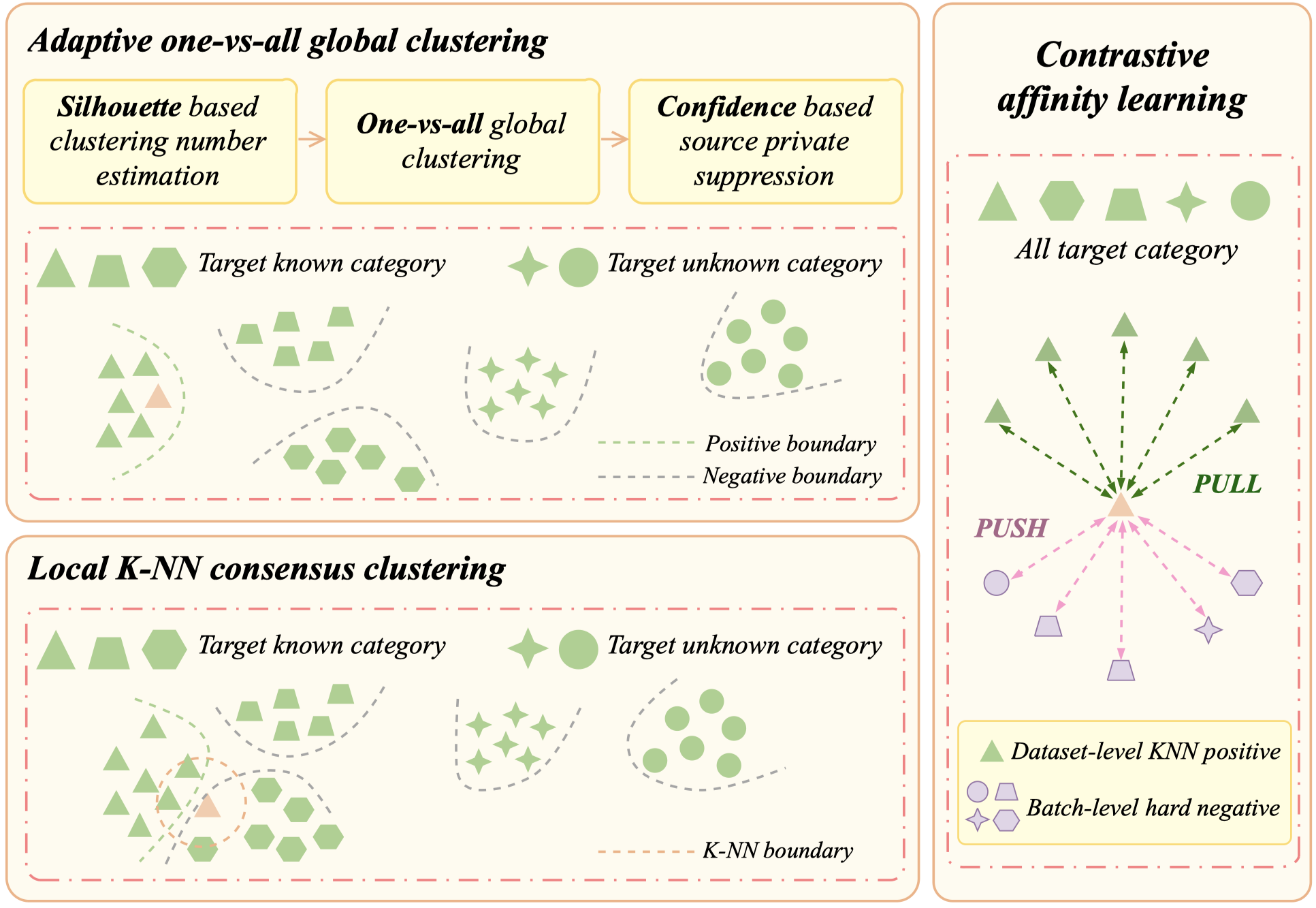

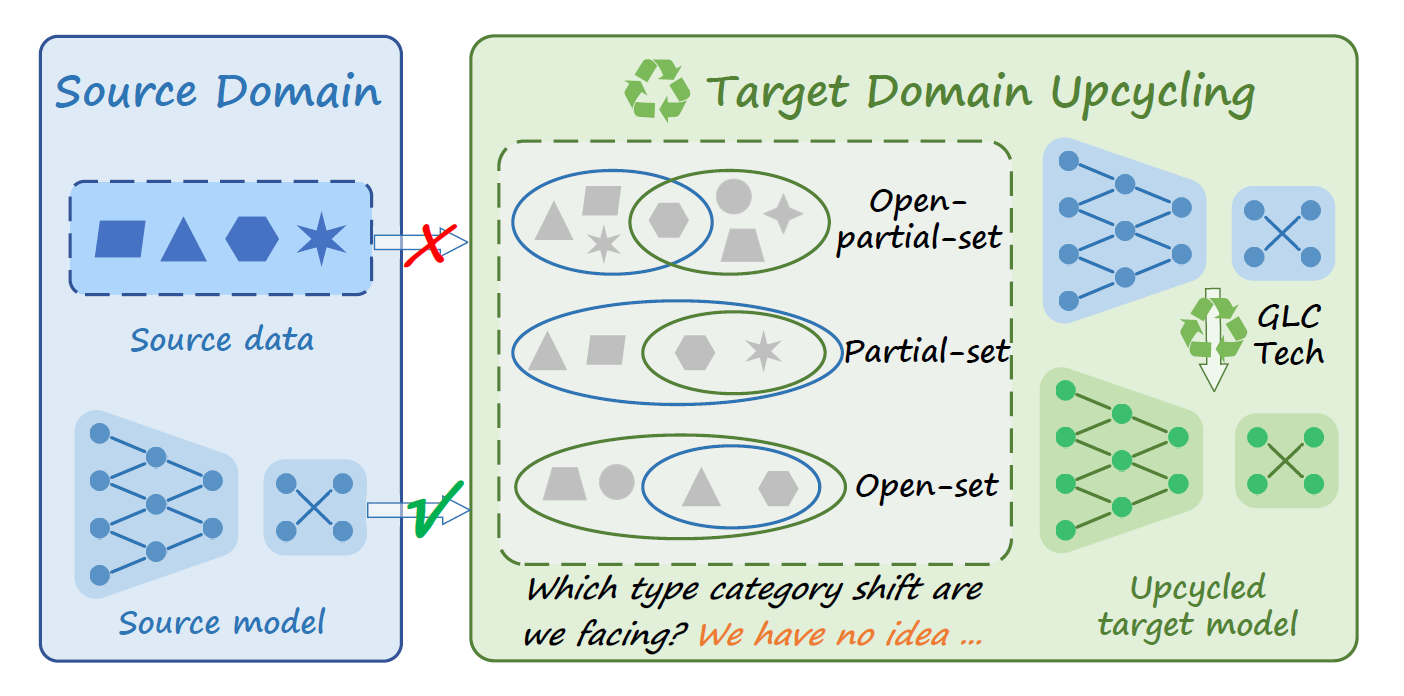

Our work GLC++, a substantial extension of GLC (CVPR 2023), has been accepted by IEEE TPAMI.

2025-03

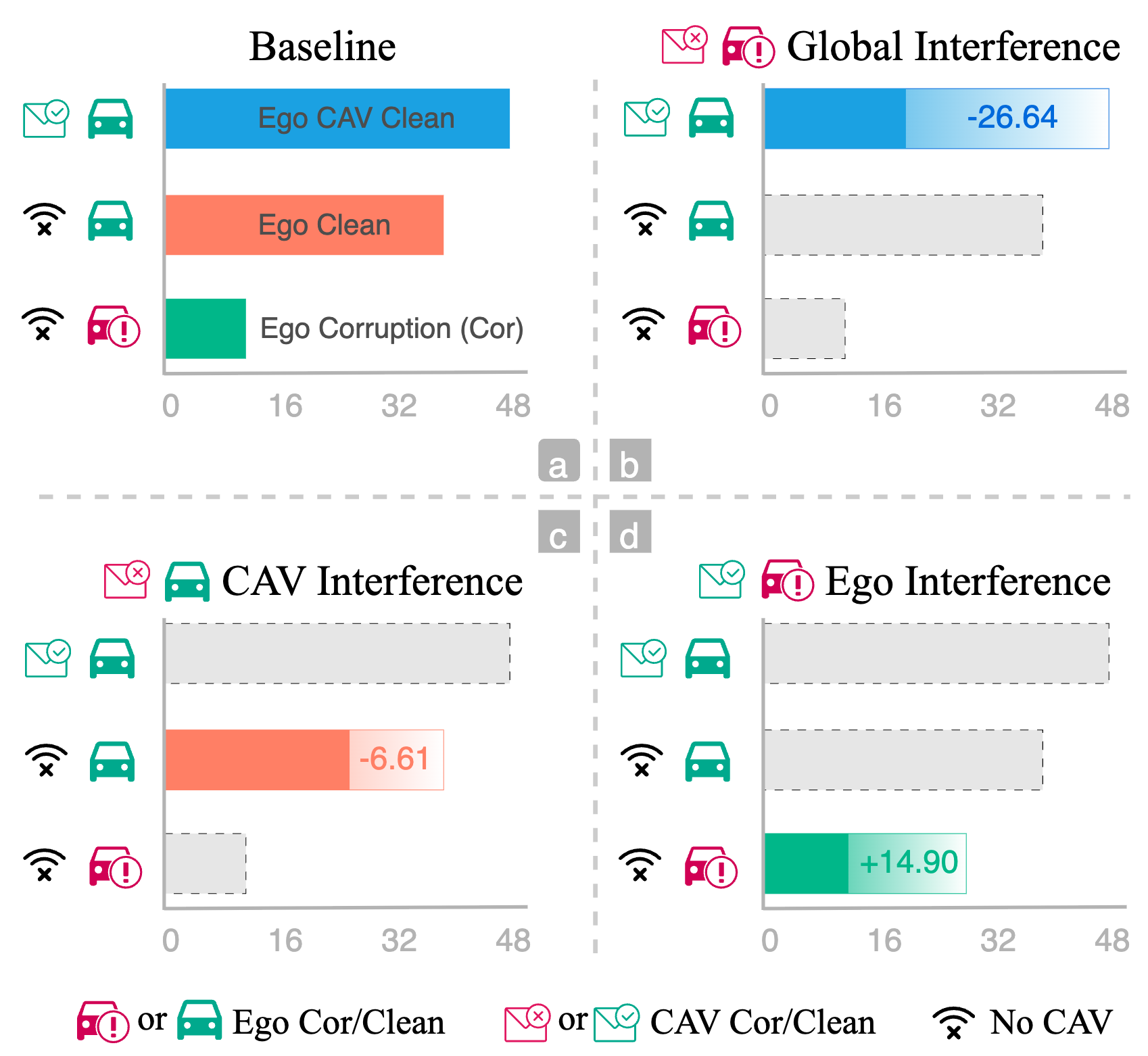

Our work RCP-Bench, a benchmark for evaluating the robustness of collaborative perception, has been accepted by CVPR 2025.

2025-01

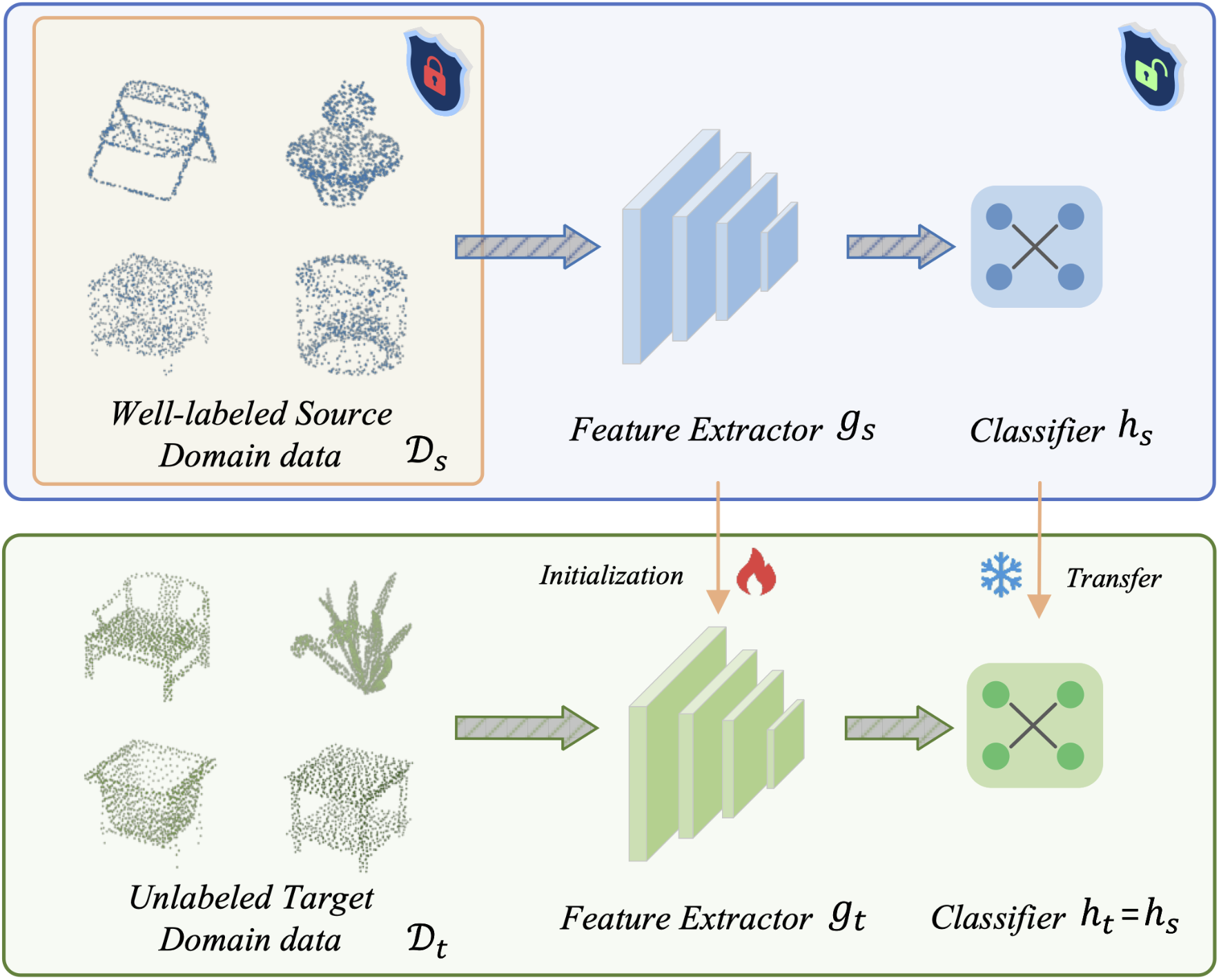

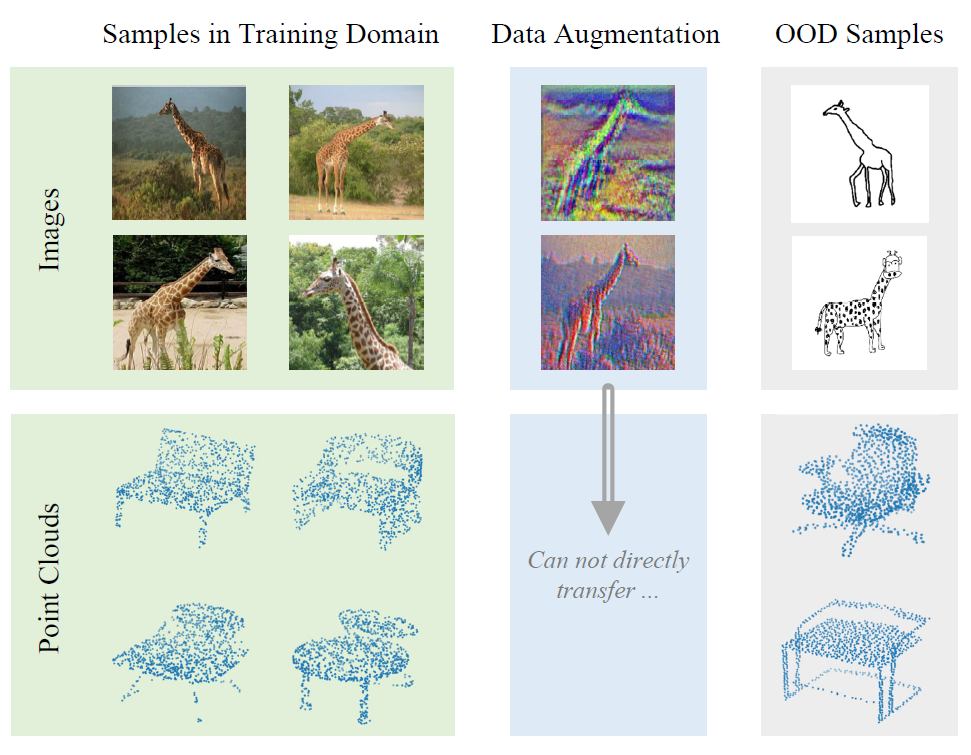

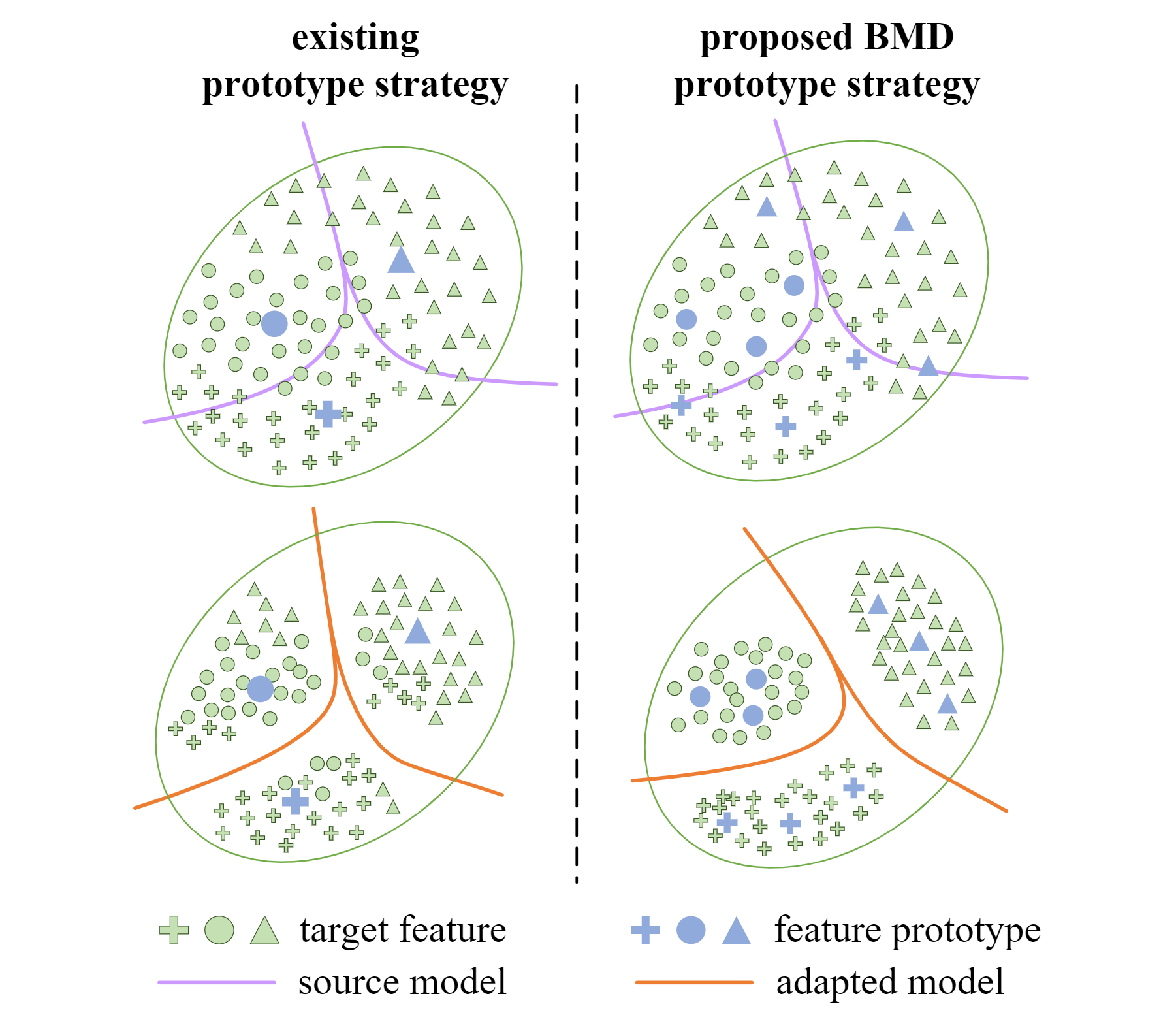

Our work BMD-v2, a substantial extension of BMD (ECCV 2022), has been accepted by IJCV.

2024-12

We won first prize (1/226) in the 2nd Global AI Drug Development Algorithm Competition.

2024-07

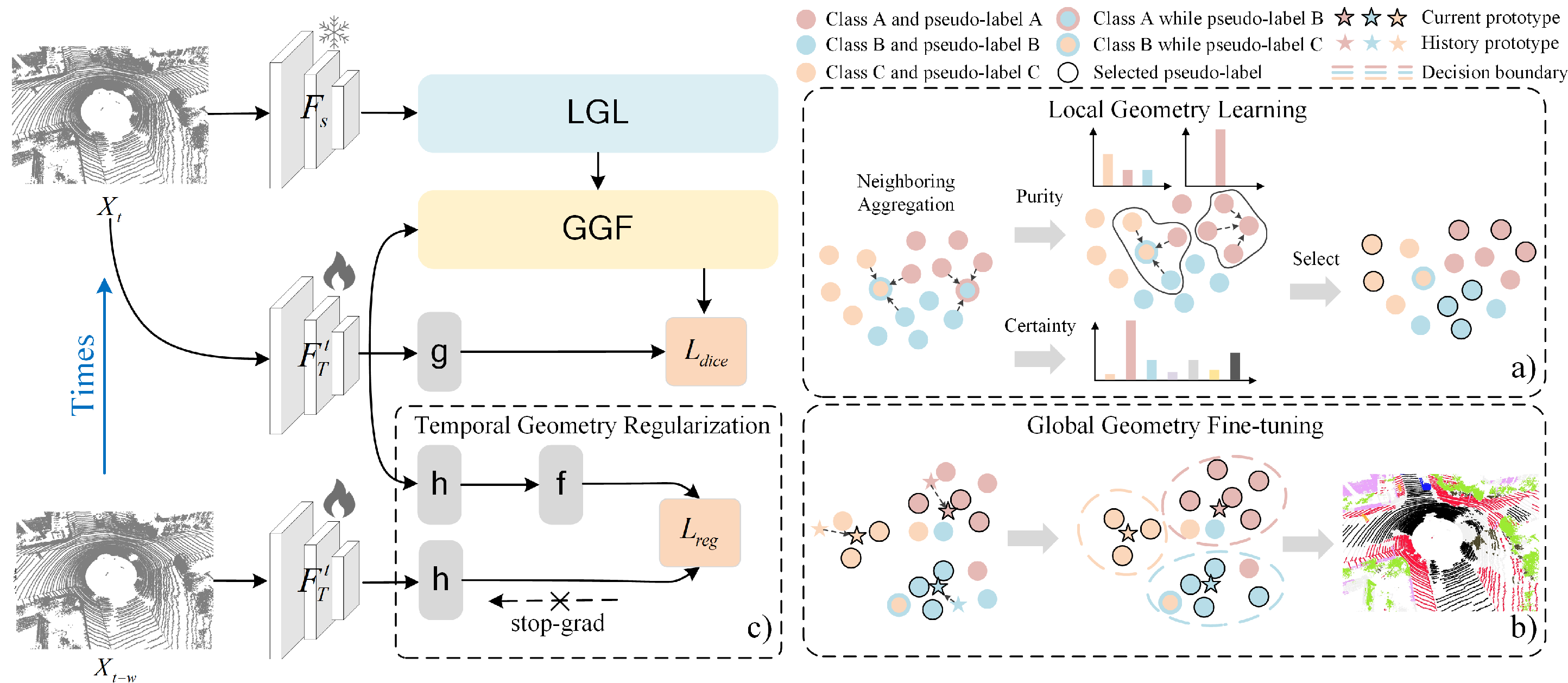

Our work HGL, on test-time domain adaptation for segmentation, has been accepted by ECCV 2024 (Oral).

2024-02

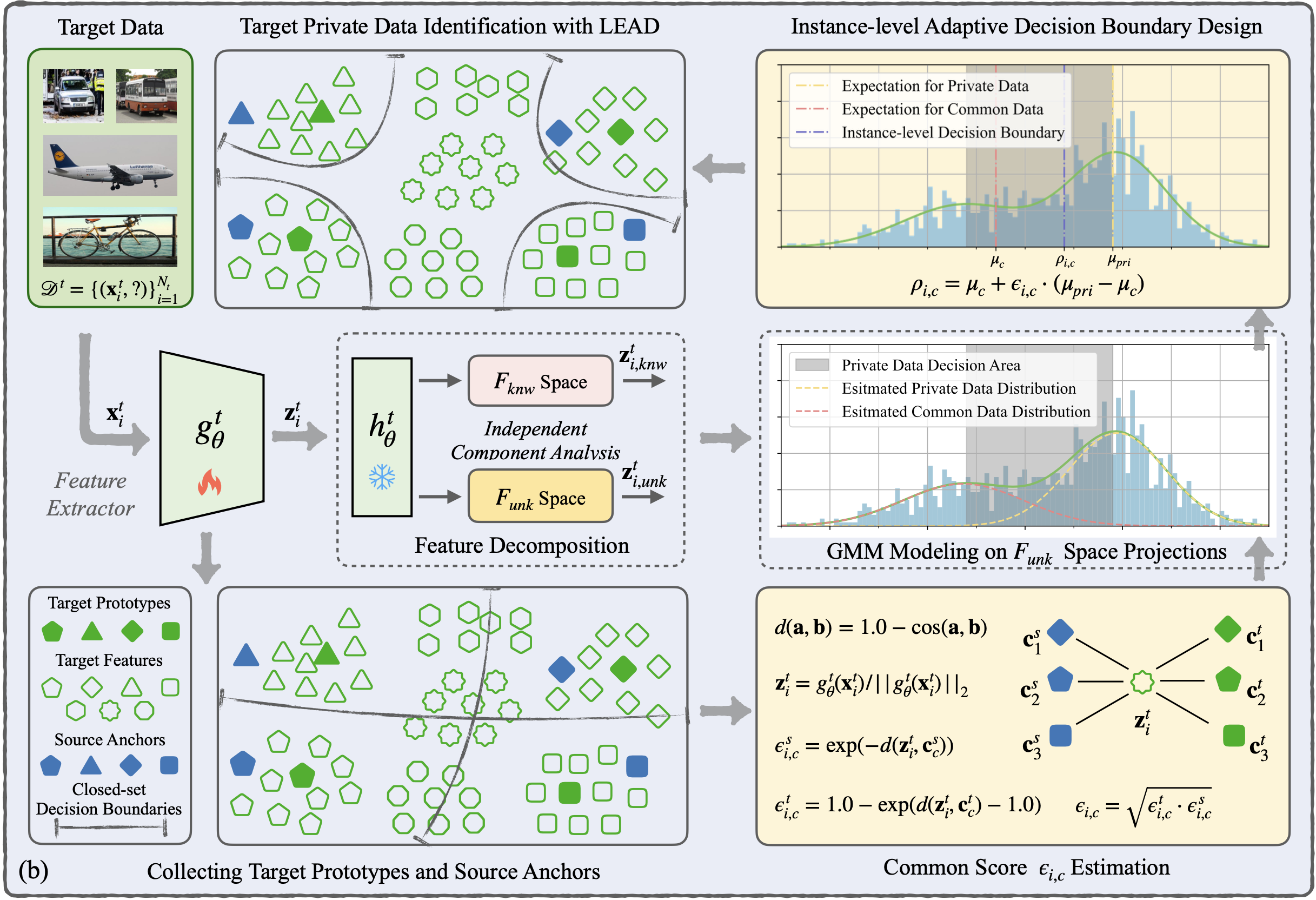

Our work LEAD, on source-free universal domain adaptation, has been accepted by CVPR 2024.

Selected Publications

* indicates equal contribution

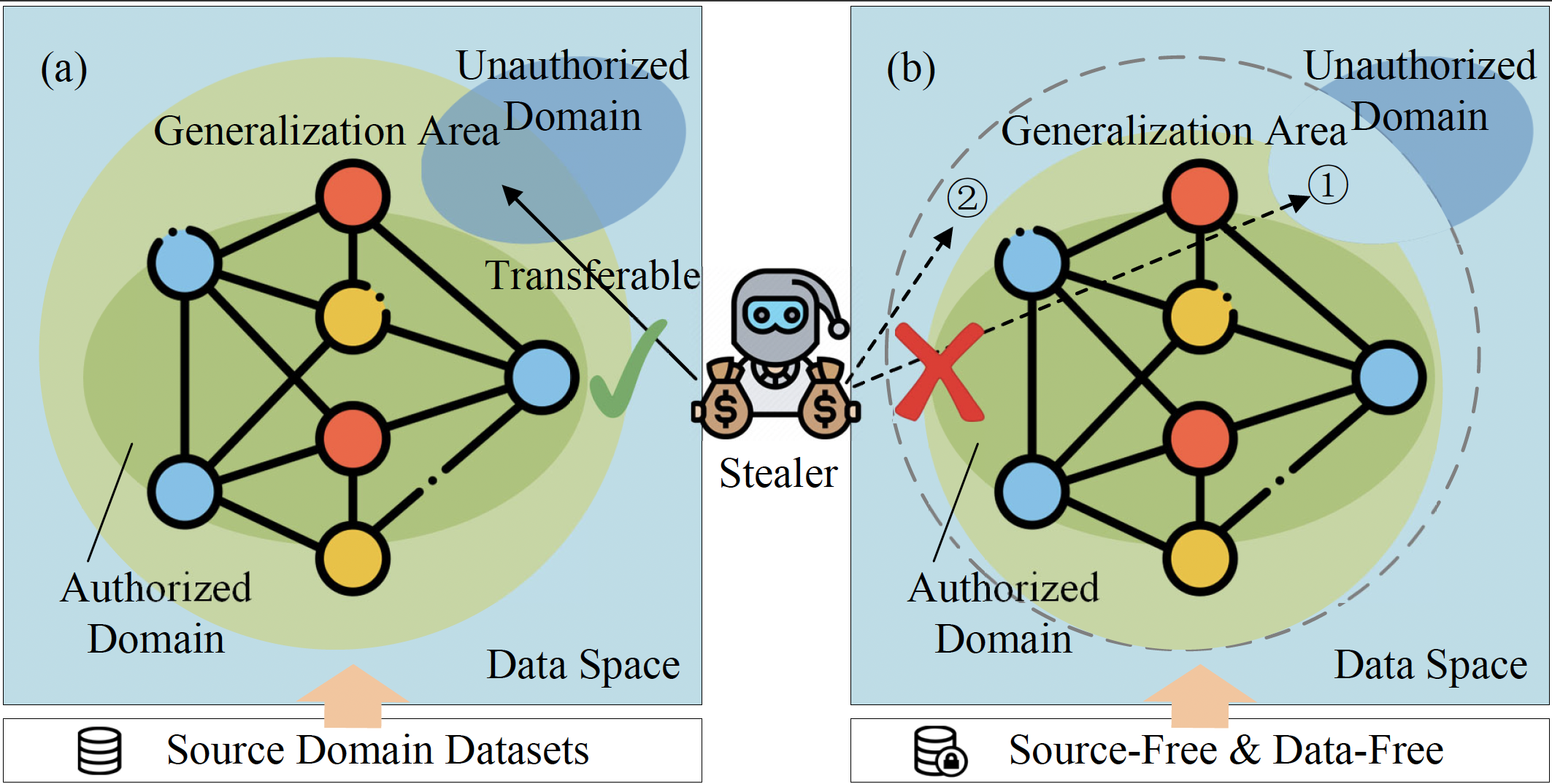

MAP: MAsk-Pruning for Source-Free Model Intellectual Property Protection


IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024

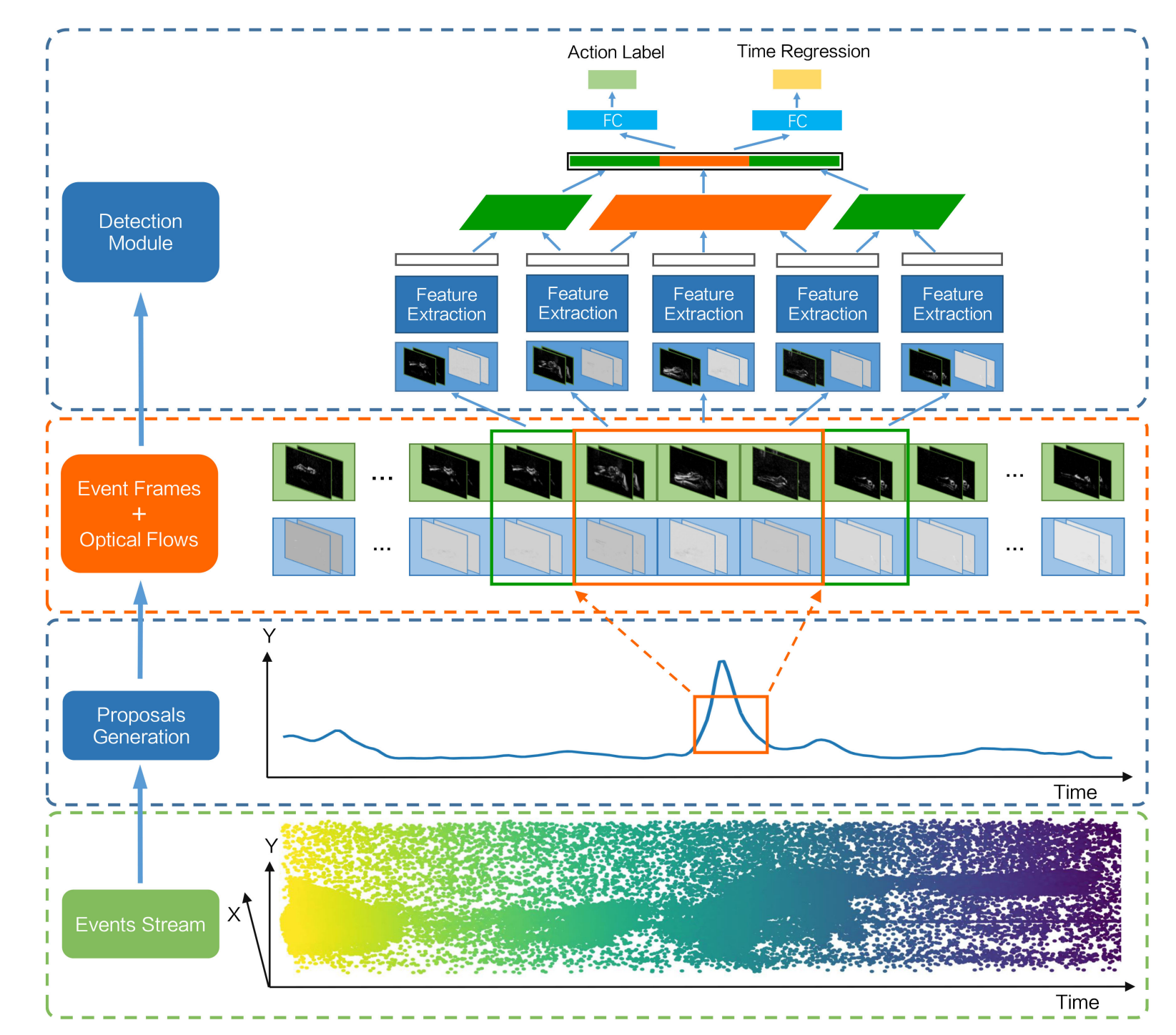

Neuromorphic Vision-based Fall Localization in Event Streams with Temporal–spatial Attention Weighted Network


IEEE Transactions on Cybernetics (T-CYB), 2022

Education & Experience

2024.12 - Present

Postdoctoral Researcher

Tongji University


2020.09 - 2024.11

Ph.D. in Automotive Engineering

Tongji University


2015.09 - 2020.07

B.S. in Automotive Engineering

Tongji University


Selected Honors & Awards

2025

Top Reviewer of NeurIPS 2025

2024

First Prize in the 2nd Global AI Drug Development Algorithm Competition (1/226)

2022, 2021

Outstanding Doctoral Student Scholarship, Tongji University

2020

Shanghai Outstanding Graduate

2019

BaoGang Scholarship (Baosteel Outstanding Student Scholarship, awarded to about 500 students nationwide each year)

Academic Services

Journal Reviewer

IEEE TPAMI, IJCV, IEEE TIP, IEEE TMM, IEEE TCSVT, IEEE RAL, ACM TOMM, ESWA, etc.

Conference Reviewer

CVPR, ICCV, ECCV, ICLR, NeurIPS, AAAI, WACV, ICRA, IROS, etc.